Von Neumann's principles of building an electronic computer

The first adding machine capable of performing four basic arithmetic operations was built by the famous French scientist and philosopher Blaise Pascal. Its main element was a cogwheel, the invention of which was in itself a key event in the history of computing. The evolution of computer technology is uneven and proceeds in leaps: periods of accumulation of forces are replaced by breakthroughs in development, after which a period of stabilization begins, during which the achieved results are put to practical use while knowledge and strength are accumulated for the next leap forward. After each round, the process of evolution enters a new, higher level.

In 1671, the German philosopher and mathematician Gottfried Leibniz also created an adding machine based on a gear wheel of a special design - the Leibniz gear wheel. Leibniz's adding machine, like the adding machines of his predecessors, performed four basic arithmetic operations. This marks the end of this period; for almost a century and a half afterwards, mankind accumulated strength and knowledge for the next round of evolution of computing technology. The 18th and 19th centuries were a time when various sciences developed rapidly, including mathematics and astronomy. They often encountered tasks requiring lengthy and laborious computations.

Another famous person in the history of computing was the English mathematician Charles Babbage. In 1823, Babbage began to work on a machine for calculating polynomials. More interestingly, in addition to performing the calculations themselves, this machine was supposed to output the results by printing them on a negative plate for photographic printing. It was planned that the machine would be powered by a steam engine. Due to technical difficulties, Babbage was unable to complete his project. Here, for the first time, the idea arose of using an external (peripheral) device to output the results of calculations. Note that another inventor, Scheutz, nevertheless built the machine conceived by Babbage in 1853 (it even turned out smaller than planned). Probably, Babbage liked the creative process of finding new ideas more than translating them into something material. In 1834, he outlined the principles of operation of another machine, which he named the "Analytical Engine". Technical difficulties again prevented him from fully realizing his ideas, and Babbage was able to bring the machine only to the experimental stage. But it is the idea that is the engine of scientific and technological progress. This second machine of Charles Babbage embodied the following ideas:

Manufacturing process management. The machine controlled the operation of the loom, changing the pattern of the fabric being created depending on the combination of holes on the special paper tape. This tape became the forerunner of such familiar media as punched cards and punched tapes.

Programmability. The operation of the machine was also controlled by a special paper tape with holes. The order of the holes on it determined the commands and the data processed by these commands. The machine had an arithmetic device and memory. The machine commands even included a conditional jump command that changed the course of calculations depending on some intermediate results.

Countess Ada Augusta Lovelace, who is considered the world's first programmer, took part in the development of this machine.

Charles Babbage's ideas were developed and used by other scientists. So, in 1890, at the turn of the 20th century, the American Herman Hollerith developed a machine that worked with data tables (the first Excel?). The machine was controlled by a program on punched cards. It was used in the 1890 US census. Hollerith founded the predecessor firm of IBM in 1896. With the death of Babbage, there was another break in the evolution of computing technology, lasting until the 1930s. From then on, the entire development of mankind became inconceivable without computers.

In 1938, the development center briefly shifted from America to Germany, where Konrad Zuse created a machine that, unlike its predecessors, operated not with decimal numbers but with binary numbers. This machine was still mechanical, but its undoubted advantage was that it realized the idea of processing data in binary code. Continuing his work, Zuse in 1941 created an electromechanical machine whose arithmetic unit was based on relays. The machine could perform floating point operations.

Overseas, in America, work was also underway during this period to create similar electromechanical machines. In 1944, Howard Aiken designed a machine called the Mark-1. Like Zuse's machine, it worked on relays. But because this machine was clearly influenced by Babbage's work, it operated on data in decimal form.

Naturally, due to the high proportion of mechanical parts, these machines were doomed.

Four generations of computers

By the end of the 1930s, the need for the automation of complex computational processes had greatly increased. This was facilitated by the rapid development of such industries as aircraft construction, atomic physics and others. From 1945 to the present day, computing has gone through 4 generations in its development:

First generation

First generation (1945-1954) - vacuum tube computers. These are prehistoric times, the era of the formation of computing. Most of the machines of the first generation were experimental devices built to test certain theoretical propositions. The weight and size of these computer dinosaurs, which often required separate buildings for themselves, have long been legendary.

Beginning in 1943, a group of specialists led by J. Mauchly and P. Eckert in the United States began to design a computer based on vacuum tubes rather than electromagnetic relays. This machine was named ENIAC (Electronic Numerical Integrator and Computer), and it ran a thousand times faster than the Mark-1. ENIAC contained 18 thousand vacuum tubes, covered an area of 9x15 meters, weighed 30 tons and consumed 150 kilowatts of power. ENIAC also had a significant drawback: it was controlled using a patch panel and had no program memory, so setting up a program meant connecting the wires in the right way, which took several hours or even days. The worst of all the shortcomings was the terrifying unreliability of the computer, since about a dozen vacuum tubes would fail in a day of work.

To simplify the process of defining programs, Mauchly and Eckert began to design a new machine that could store the program in its memory. In 1945, the famous mathematician John von Neumann was brought into the work and prepared a report on this machine. In this report, von Neumann clearly and simply formulated the general principles of operation of universal computing devices, i.e. computers. ENIAC itself, the first operating machine built on vacuum tubes, was officially put into service on February 15, 1946. Attempts were made to use it to solve some of the problems prepared by von Neumann and related to the atomic bomb project. It was then transported to the Aberdeen Proving Ground, where it worked until 1955.

ENIAC became the first representative of the 1st generation of computers. Any classification is conditional, but most experts agreed that generations should be distinguished based on the element base on the basis of which the machines are built. Thus, the first generation is represented by tube machines.

It is necessary to note the enormous role of the American mathematician von Neumann in the development of the first generation of technology. It was necessary to comprehend the strengths and weaknesses of ENIAC and provide recommendations for subsequent developments. In the report of von Neumann and his colleagues H. Goldstine and A. Burks (June 1946), the requirements for the structure of computers were clearly formulated. Many of the provisions of this report are called the von Neumann principles.

The first projects of domestic computers were proposed by S.A. Lebedev and B.I. Rameev in 1948. In 1949-1951, the MESM (small electronic calculating machine) was built to S.A. Lebedev's design. The first test run of the model of the machine took place in November 1950, and the machine was put into operation in 1951. MESM operated in a binary system, with a three-address command system, and the computation program was stored in an operational memory device. Lebedev's machine with parallel word processing was a fundamentally new solution. It was one of the first computers in the world and the first on the European continent with a program stored in memory.

The first generation also includes the BESM-1 (large electronic calculating machine), whose development under the leadership of S.A. Lebedev was completed in 1952. It contained 5 thousand vacuum tubes and worked without interruption for 10 hours. Its performance reached 10 thousand operations per second (Appendix 1).

Almost simultaneously, the Strela computer (Appendix 2) was being designed under the direction of Yu. Bazilevsky; in 1953 it was put into production. Later, the Ural-1 computer appeared (Appendix 3), which laid the foundation for a large series of Ural machines, developed and introduced into production under the leadership of B.I. Rameev. In 1958, the first-generation M-20 computer was put into serial production (speed up to 20 thousand operations/s).

The computers of the first generation had a speed of several tens of thousands of operations per second. Ferrite cores were used as internal memory, and the ALU and the control unit (CU) were built on vacuum tubes. The speed of the computer was determined by its slowest component, the internal memory, and this reduced the overall effect.

First-generation computers were focused on performing arithmetic operations. When attempts were made to adapt them to analysis tasks, they turned out to be ineffective.

There were no programming languages as such, and programmers used machine instructions or assemblers to code their algorithms. This complicated and delayed the programming process.

By the end of the 50s, programming tools underwent fundamental changes: a transition to automation of programming with the help of universal languages and standard program libraries was carried out. The use of universal languages gave rise to translators.

The programs were executed task by task, i.e. the operator had to follow the progress of the solution of the problem and, upon reaching the end, initiate the execution of the next task himself.

Second generation

In the second generation of computers (1955-1964), transistors were used instead of vacuum tubes, and magnetic cores and magnetic drums, the distant ancestors of modern hard drives, began to be used as memory devices. All this made it possible to drastically reduce the size and cost of computers, which then began to be built for sale for the first time.

But the main achievements of this era belong to the field of programs. On the second generation of computers, for the first time, what is called the operating system today appeared. At the same time, the first high-level languages were developed - Fortran, Algol, Cobol. These two important improvements have made writing computer programs much easier and faster; programming, while remaining a science, acquires the features of a craft.

Accordingly, the scope of application of computers expanded. Now it was not only scientists who could count on access to computing; computers have found use in planning and management, and some large firms have even computerized their bookkeeping, anticipating fashion by twenty years.

Semiconductors became the element base of the second generation. Without a doubt, transistors can be considered one of the most impressive wonders of the 20th century.

The patent for the invention of the transistor was issued in 1948 to the Americans J. Bardeen and W. Brattain, and eight years later, together with the theoretician W. Shockley, they became Nobel Prize winners. The switching speeds of the very first transistor elements turned out to be hundreds of times higher than those of tube elements, and the same was true of their reliability and efficiency. For the first time, memory on ferrite cores and thin magnetic films began to be widely used, and inductive elements - parametrons - were tested.

The first onboard computer for installation on an intercontinental missile, the Atlas, entered service in the United States in 1955. The machine used 20,000 transistors and diodes and consumed 4 kilowatts. In 1961, Burroughs ground-based computers controlled the space flights of Atlas rockets, and IBM machines controlled the flight of astronaut Gordon Cooper. Computers controlled the flights of unmanned Ranger-class spacecraft to the Moon in 1964, as well as the Mariner spacecraft to Mars. Soviet computers performed similar functions.

In 1956, IBM developed floating magnetic heads that hover on an air cushion. Their invention made it possible to create a new type of memory - disk storage devices, the significance of which was fully appreciated in the subsequent decades of the development of computer technology. The first disk storage devices appeared in the IBM 305 RAMAC machine (Appendix 4). It had a pack of 50 magnetically coated metal disks that rotated at a speed of 1,200 rpm. On the surface of each disk there were 100 tracks for recording data, 10,000 characters each.

The first serial general purpose computers based on transistors were released in 1958 simultaneously in the USA, Germany and Japan.

The first minicomputers appear (for example, PDP-8 (Appendix 5)).

In the Soviet Union, the first tubeless machines "Setun", "Hrazdan" and "Hrazdan-2" were created in 1959-1961. In the 60s, Soviet designers developed about 30 models of transistor computers, most of which were mass-produced. The most powerful of them, "Minsk-32", performed 65 thousand operations per second. Whole families of machines appeared: "Ural", "Minsk", BESM.

The BESM-6 (Appendix 6) became the record holder among the second generation computers, which had a speed of about a million operations per second - one of the most productive in the world. The architecture and many technical solutions in this computer were so progressive and ahead of their time that it was successfully used almost to our time.

Especially for the automation of engineering calculations at the Institute of Cybernetics of the Academy of Sciences of the Ukrainian SSR under the leadership of Academician V.M. Glushkov computers MIR (1966) and MIR-2 (1969) were developed. An important feature of the MIR-2 machine was the use of a television screen for visual control of information and a light pen, with which it was possible to correct the data directly on the screen.

The construction of such systems, which included about 100 thousand switching elements, would be simply impossible on the basis of lamp technology. Thus, the second generation was born in the depths of the first, adopting many of its features. However, by the mid-60s, the boom in transistor manufacturing reached its maximum - the market saturation occurred. The fact is that the assembly of electronic equipment was a very laborious and slow process that did not lend itself well to mechanization and automation. Thus, the conditions are ripe for the transition to a new technology that would make it possible to adapt to the growing complexity of circuits by eliminating traditional connections between their elements.

Third generation

Finally, in the third generation of computers (1965-1974), integrated circuits were first used - whole devices and nodes of tens and hundreds of transistors, made on a single semiconductor crystal (what is now called microcircuits). At the same time, semiconductor memory appeared, which is used to this day in personal computers as operational memory. The priority in the invention of integrated circuits, which became the element base of third-generation computers, belongs to the American scientists J. Kilby and R. Noyce, who made this discovery independently of each other. The mass production of integrated circuits began in 1962, and in 1964 a rapid transition from discrete elements to integrated ones began. The aforementioned ENIAC, measuring 9x15 meters, could in 1971 be assembled on a plate of 1.5 square centimeters. The transformation of electronics into microelectronics began.

During these years, the production of computers took on an industrial scale. IBM, which had made its way into the leaders, was the first to implement a family of computers - a series of computers that are fully compatible with each other, from the smallest, the size of a small cabinet (they did not make them smaller then), to the most powerful and expensive models. The most widespread in those years was the System/360 family from IBM, on the basis of which the ES computer series was developed in the USSR. In 1973, the first computer model of the ES series was released, and since 1975, the models ES-1012, ES-1032, ES-1033, ES-1022, and later the more powerful ES-1060, have appeared.

Within the framework of the third generation, a unique machine, "ILLIAC IV", was built in the USA. In its initial version it was planned to use 256 data processing devices made on monolithic integrated circuits. Later, the project was changed due to the rather high cost (more than $16 million): the number of processors had to be reduced to 64, and integrated circuits with a low degree of integration had to be used. The shortened version of the project was completed in 1972; the nominal speed of "ILLIAC IV" was 200 million operations per second. For almost a year, this computer held the record for computing speed.

Back in the early 60s, the first minicomputers appeared - small, low-power computers that were affordable for small firms or laboratories. Minicomputers represented the first step towards personal computers, prototypes of which were not released until the mid-1970s. The well-known family of PDP minicomputers from Digital Equipment served as a prototype for the Soviet series of SM machines.

Meanwhile, the number of elements and connections between them, which fit in one microcircuit, was constantly growing, and in the 70s, integrated circuits already contained thousands of transistors. This made it possible to combine most of the computer's components in a single small part - which Intel did in 1971 with the release of the first microprocessor, which was intended for just-appeared desktop calculators. This invention was destined to make a real revolution in the next decade - after all, the microprocessor is the heart and soul of our personal computer.

But that's not all - indeed, the turn of the 60s and 70s was a fateful time. In 1969, the first global computer network was born - the embryo of what we now call the Internet. In the same 1969, work began on the Unix operating system, followed soon afterwards by the C programming language; both had a huge impact on the software world and still retain their leading position.

Fourth generation

The next change in the element base led to a change in generations. In the 70s, work was actively underway to create large and very large integrated circuits (LSI and VLSI), which made it possible to place tens of thousands of elements on one crystal. This led to a further significant reduction in the size and cost of computers. The work with the software has become more user-friendly, which has led to an increase in the number of users.

In principle, with such a degree of integration of the elements, it became possible to try to create a functionally complete computer on a single crystal. Corresponding attempts were made, although they were met with mostly an incredulous smile. Probably, these smiles would decrease if it was possible to foresee that this very idea would become the cause of the extinction of mainframes in some fifteen years from now.

Nevertheless, in the early 70s, Intel released the 4004 microprocessor (MP). And if before that in the world of computing there were only three directions (supercomputers, mainframes and minicomputers), now one more was added to them - microprocessor. In the general case, a processor is understood as a functional block of a computer designed for logical and arithmetic processing of information based on the principle of microprogram control. In terms of hardware implementation, processors can be divided into microprocessors (all processor functions are fully integrated) and processors with small and medium integration. Structurally, this is expressed in the fact that microprocessors implement all the functions of a processor on one chip, and processors of other types implement them by connecting a large number of microcircuits.

So, the first 4004 microprocessor was created by Intel at the turn of the 70s. It was a 4-bit parallel computing device, and its capabilities were severely limited. The 4004 could perform four basic arithmetic operations and was initially used only in pocket calculators. Later, the scope of its application was expanded due to its use in various control systems (for example, to control traffic lights). Intel, having correctly foreseen the prospects of microprocessors, continued intensive development, and one of its projects ultimately led to a major success that predetermined the future path of development of computing technology.

It was a project to develop the 8-bit 8080 processor (1974). This microprocessor had a fairly developed command system and could divide numbers. It was this processor that was used to create the Altair personal computer, for which the young Bill Gates wrote one of his first interpreters of the BASIC language. Probably, it is from this moment that the 5th generation should be counted.

Fifth generation

The transition to fifth-generation computers implied a transition to new architectures focused on the creation of artificial intelligence.

It was believed that the architecture of fifth-generation computers would contain two main blocks. One of them is the computer itself, in which communication with the user is carried out by a unit called the "intelligent interface". The task of the interface is to understand text written in a natural language, or speech, and to translate the problem statement expressed in this way into a working program.

Basic requirements for computers of the 5th generation:

· creation of a developed human-machine interface (speech and image recognition);

· development of logical programming for the creation of knowledge bases and artificial intelligence systems;

· creation of new technologies in the production of computer technology;

· creation of new architectures of computers and computing systems.

The new technical capabilities of computer technology were supposed to expand the range of tasks to be solved and make it possible to move on to the tasks of creating artificial intelligence. Knowledge bases (databases) in various areas of science and technology are one of the components necessary for the creation of artificial intelligence. The creation and use of databases requires a high speed of the computing system and a large amount of memory. General-purpose computers are capable of high-speed computing, but are not suitable for high-speed comparison and sorting operations on large volumes of records, usually stored on magnetic disks. To create programs for filling, updating and working with databases, special object-oriented and logical programming languages have been created that provide the greatest opportunities in comparison with conventional procedural languages. The structure of these languages requires a transition from the traditional von Neumann computer architecture to architectures that take into account the requirements of the tasks of creating artificial intelligence.

The class of supercomputers includes computers that have the maximum performance at the time of their release, or the so-called computers of the 5th generation.

The first supercomputers appeared as early as the second generation of computers (1955-1964); they were designed to solve complex problems that required high computation speed. These were LARC from UNIVAC, Stretch from IBM and the CDC-6600 (CYBER family) from Control Data Corporation. They used parallel processing methods (increasing the number of operations performed per unit of time), command pipelining (while one command is being executed, the next is read from memory and prepared for execution) and parallel processing using a complex processor consisting of a matrix of data processors and a special control processor that distributes tasks and controls the data flow in the system. Computers that execute several programs in parallel using several microprocessors are called multiprocessor systems. Until the mid-1980s, Sperry Univac and Burroughs were on the list of the largest supercomputer manufacturers in the world. The former is known, in particular, for its UNIVAC-1108 and UNIVAC-1110 mainframes, which were widely used in universities and government organizations.

Following the merger of Sperry Univac and Burroughs, the combined firm UNISYS continued to support both mainframe lines while maintaining upward compatibility in each. This is clear evidence of the immutable rule that supported the development of mainframes - the preservation of the performance of previously developed software.

Intel is also famous in the world of supercomputers. Intel's Paragon multiprocessor computers, which belong to the family of distributed-memory multiprocessor structures, have likewise become classics.

Von Neumann principles

In 1946, J. von Neumann, H. Goldstine and A. Burks outlined new principles for the construction and operation of computers in a joint article. Subsequently, the first two generations of computers were produced on the basis of these principles. In later generations, some changes took place, although von Neumann's principles are still valid today. In fact, von Neumann managed to generalize the scientific developments and discoveries of many other scientists and formulate fundamentally new principles on their basis:

1. The principle of representation and storage of numbers.

A binary number system is used to represent and store numbers. Its advantages over the decimal number system are that a bit is easy to implement physically, memory for a large number of bits is quite cheap, the devices can be made quite simple, and arithmetic and logical operations in the binary number system are also quite simple.
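The claim that binary arithmetic reduces to simple logical operations can be illustrated with a small sketch. It is not taken from the von Neumann report: the function names (`full_adder`, `add_binary`, `to_bits`, `from_bits`) and the least-significant-bit-first list layout are assumptions made for this example only.

```python
def full_adder(a, b, carry_in):
    """Add three bits using only logical operations.

    Returns (sum_bit, carry_out).
    """
    sum_bit = a ^ b ^ carry_in                   # XOR gives the sum bit
    carry_out = (a & b) | (carry_in & (a ^ b))   # AND/OR give the carry
    return sum_bit, carry_out


def add_binary(x_bits, y_bits):
    """Add two equal-length bit lists (least significant bit first)."""
    result = []
    carry = 0
    for a, b in zip(x_bits, y_bits):
        s, carry = full_adder(a, b, carry)
        result.append(s)
    result.append(carry)  # the final carry becomes the top bit
    return result


def to_bits(n, width):
    """Non-negative integer -> list of bits, least significant first."""
    return [(n >> i) & 1 for i in range(width)]


def from_bits(bits):
    """List of bits (least significant first) -> integer."""
    return sum(bit << i for i, bit in enumerate(bits))


if __name__ == "__main__":
    total = add_binary(to_bits(13, 8), to_bits(29, 8))
    print(from_bits(total))
```

The whole adder is built from three kinds of gates (XOR, AND, OR) repeated once per bit, which is the sense in which the hardware "can be made quite simple".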

2. The principle of computer programmed control.

The work of the computer is controlled by a program consisting of a set of instructions. The commands are executed sequentially one after another. The commands process data stored in the computer's memory.

3. The principle of a stored program.

Computer memory is used not only for storing data, but also for programs. In this case, both program commands and data are encoded in a binary number system, i.e. their writing method is the same. Therefore, in certain situations, you can perform the same actions on commands as on data.
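As a hedged illustration of "the same actions on commands as on data", the sketch below packs an instruction into one binary word and then applies ordinary addition to it. The 16-bit format (4-bit operation code, 12-bit address) and the `LOAD` code are hypothetical and chosen only for this example; real machines use their own encodings.

```python
ADDRESS_BITS = 12                      # hypothetical: low 12 bits hold the address
ADDRESS_MASK = (1 << ADDRESS_BITS) - 1
LOAD = 0b0001                          # hypothetical operation code


def encode(opcode, address):
    """Pack an operation code and an address into one memory word."""
    return (opcode << ADDRESS_BITS) | address


def decode(word):
    """Split a memory word back into (opcode, address)."""
    return word >> ADDRESS_BITS, word & ADDRESS_MASK


# One memory holds both the command and the data it refers to.
memory = [0] * 16
memory[0] = encode(LOAD, 10)   # a command: "load the contents of cell 10"
memory[10] = 1234              # plain data

# The command is just a number, so arithmetic works on it like on any data:
# adding 1 to the word makes the command point at the next cell.
memory[0] = memory[0] + 1

if __name__ == "__main__":
    print(format(memory[0], "016b"), decode(memory[0]))
```

Early programs used exactly this kind of address arithmetic on their own commands to step through arrays; index registers later made the trick unnecessary.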

4. The principle of direct (random) access to memory.

The cells of the main memory of the computer have sequentially numbered addresses. At any time, you can refer to any memory cell by its address.

5. The principle of branching and cyclic calculations.

Conditional jump instructions allow you to jump to any part of the code, thereby providing the ability to branch and re-execute some parts of the program.
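The principles listed above can be sketched together as a toy stored-program machine. Everything here is an assumption made for illustration: the operation codes, the word format (code in the high bits, 8-bit address in the low bits), the single accumulator, and the sample program that sums the numbers from n down to 1. It is not a model of any historical computer.

```python
# Hypothetical operation codes for a toy accumulator machine.
LOAD, STORE, ADD, SUB, JZ, JMP, HALT = 1, 2, 3, 4, 5, 6, 7


def word(opcode, address=0):
    """Encode a command as a single number: opcode in the high bits."""
    return (opcode << 8) | address


def run(memory):
    """Fetch, decode and execute commands until HALT is reached."""
    acc = 0  # accumulator
    pc = 0   # program counter: address of the next command
    while True:
        opcode, addr = memory[pc] >> 8, memory[pc] & 0xFF
        pc += 1                      # commands run sequentially by default
        if opcode == LOAD:
            acc = memory[addr]
        elif opcode == STORE:
            memory[addr] = acc
        elif opcode == ADD:
            acc += memory[addr]
        elif opcode == SUB:
            acc -= memory[addr]
        elif opcode == JZ:           # conditional jump: branch if acc == 0
            if acc == 0:
                pc = addr
        elif opcode == JMP:          # unconditional jump: used to loop
            pc = addr
        elif opcode == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode}")


def sum_to(n):
    """Build a memory image (program + data) and run it."""
    N, SUM, ONE = 20, 21, 22         # data cell addresses
    memory = [0] * 32
    program = [
        word(LOAD, N),    # 0: acc = counter
        word(JZ, 9),      # 1: if counter is zero, jump to HALT
        word(LOAD, SUM),  # 2
        word(ADD, N),     # 3
        word(STORE, SUM), # 4: sum += counter
        word(LOAD, N),    # 5
        word(SUB, ONE),   # 6
        word(STORE, N),   # 7: counter -= 1
        word(JMP, 0),     # 8: repeat the loop
        word(HALT),       # 9
    ]
    memory[:len(program)] = program  # commands occupy adjacent cells
    memory[N], memory[SUM], memory[ONE] = n, 0, 1
    return run(memory)[SUM]


if __name__ == "__main__":
    print(sum_to(5))
```

Commands and data share one list, every cell is reached by its index, and the loop exists only because `JZ` and `JMP` can change the program counter.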

The most important consequence of these principles is that the program was no longer a permanent part of the machine (as it is, for example, in a calculator). The program became easy to change, while the equipment, of course, remains unchanged and very simple. For comparison, the program of the ENIAC computer (which had no program stored in memory) was determined by special jumpers on the panel. It could take more than one day to reprogram the machine (set the jumpers differently).

And although programs for modern computers can take months to develop, installing them on a computer takes several minutes even for large programs. Such a program can be installed on millions of computers and work on each of them for years.

Appendices

Appendix 1

Appendix 2

Computer "Ural"

Appendix 3

Computer "Strela"

Appendix 4

IBM-305 and RAMAC

Appendix 5

mini-computer PDP-8

Appendix 6


In 1946, J. von Neumann, H. Goldstine and A. Burks outlined new principles for the construction and operation of computers in a joint article. The first two generations of computers were subsequently produced on the basis of these principles. In later generations, some changes took place, although von Neumann's principles are still valid today.

In fact, von Neumann managed to generalize the scientific developments and discoveries of many other scientists and formulate fundamentally new things on their basis.

Principle of programmed control: a program consists of a set of instructions executed by a processor in a specific sequence.

The principle of memory homogeneity: programs and data are stored in the same memory.

Addressing principle: structurally, the main memory consists of numbered cells. Any cell is available to the processor at any time.

Computers built on the listed principles are of the von Neumann type.

The most important consequence of these principles is that the program was no longer a permanent part of the machine (as it is, for example, in a calculator). The program became easy to change. For comparison, the program of the ENIAC computer (which had no program stored in memory) was determined by special jumpers on the panel. It could take more than one day to reprogram the machine (set the jumpers differently). And although programs for modern computers can take years to write, they run on millions of computers, and installing them does not require significant time.

In addition to the above three principles, von Neumann proposed the binary coding principle - to represent data and commands, a binary number system is used (the first machines used a decimal number system). But subsequent developments have shown the possibility of using non-traditional number systems.

At the beginning of 1956, on the initiative of Academician S.L. Sobolev, head of the Department of Computational Mathematics at the Faculty of Mechanics and Mathematics of Moscow University, an electronics department was established at the computing center of Moscow State University, and a seminar began work with the aim of creating a practical digital computer intended for use in universities, as well as in laboratories and design bureaus of industrial enterprises. ... It was required to develop a small computer that was easy to learn and use, reliable, inexpensive, and at the same time effective across a wide range of tasks. A thorough year-long study of the computers available at that time and of the technical possibilities for their implementation led to a non-standard decision: to use in the new machine not a binary code but a ternary symmetric code, implementing the balanced number system, which D. Knuth would call twenty years later perhaps the most elegant, and whose merits, as later became known, had been revealed by K. Shannon in 1950. Unlike the binary code with the digits 0 and 1 generally accepted in modern computers, which is arithmetically defective because negative numbers cannot be represented in it directly, the ternary code with the digits -1, 0, 1 provides an optimal construction of the arithmetic of signed numbers. The ternary number system is based on the same positional principle of coding numbers as the binary system adopted in modern computers, but the weight of the i-th position in it is not $2^i$ but $3^i$. The digits themselves are not two-valued (bits) but three-valued (trits): in addition to 0 and 1 they admit a third value, which is -1 in the symmetric system, so that positive and negative numbers are represented uniformly. The value of an n-trit integer N is defined similarly to the value of an n-bit integer:

$$N = \sum_{i=0}^{n-1} a_i \cdot 3^i,$$

where $a_i \in \{1, 0, -1\}$ is the value of the i-th digit.
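The positional rule described above, in which the i-th position has weight 3 to the power i and each digit is -1, 0 or 1, can be illustrated with a minimal Python sketch. The function names are illustrative and not taken from the source, and the digits are stored least significant first as an assumption of this example.

```python
def to_balanced_ternary(n: int) -> list[int]:
    """Return the balanced-ternary digits of n, least significant first."""
    if n == 0:
        return [0]
    digits = []
    while n != 0:
        r = n % 3            # Python's % yields 0, 1 or 2, also for negative n
        if r == 2:           # digit 2 is written as -1 with a carry into the next position
            r = -1
        digits.append(r)
        n = (n - r) // 3     # exact division: n - r is a multiple of 3
    return digits


def from_balanced_ternary(digits: list[int]) -> int:
    """Evaluate the sum of a_i * 3**i for digits given least significant first."""
    return sum(a * 3**i for i, a in enumerate(digits))


five = to_balanced_ternary(5)         # 5 = 9 - 3 - 1
minus_five = to_balanced_ternary(-5)  # every digit of 5 with its sign flipped
```

In this sketch, negating a number amounts to flipping the sign of every digit, which is one way to see why no separate sign bit is needed in the symmetric system.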

In April 1960, interdepartmental tests of a prototype computer named "Setun" were carried out. According to the results of these tests, "Setun" was recognized as the first operating model of a universal computer built on tubeless elements, characterized by "high performance, sufficient reliability, small dimensions and ease of maintenance." Thanks to the naturalness of the ternary symmetric code, "Setun" turned out to be a truly universal, easily programmable and highly efficient computing tool, which proved itself, in particular, as a technical means of teaching computational mathematics in more than thirty universities. At the Zhukovsky Air Force Engineering Academy, it was on "Setun" that an automated computer training system was first implemented.

According to von Neumann principles, a computer consists of:

· arithmetic logic unit (ALU), which performs arithmetic and logical operations;

· control unit (CU), designed to organize the execution of programs;

· storage devices (memory), including random access memory (RAM, primary memory) and external storage; data and programs are stored in main memory; a memory module consists of many numbered cells, and a binary number can be written to each cell, which is interpreted either as a command or as data;

· input-output devices, which transfer data between the computer and an external environment consisting of various peripheral devices, including secondary memory, communication equipment and terminals.

The system bus provides interaction between the processor (ALU and CU), main memory and input-output devices.

Von Neumann's computer architecture is considered classical, and most computers are built on it. In general, the term "von Neumann architecture" refers to the physical separation of the processor module from the storage devices for programs and data. The idea of storing computer programs in shared memory made it possible to turn computers into versatile devices capable of performing a wide range of tasks. Programs and data are entered into memory from an input device through the arithmetic logic unit. All program commands are written into adjacent memory cells, while data for processing can be contained in arbitrary cells. For any program, the last command must be a halt command.

The overwhelming majority of computers today are von Neumann machines. The only exceptions are certain types of systems for parallel computing, in which there is no instruction counter, the classical concept of a variable is not implemented, and there are other significant fundamental differences from the classical model (examples are dataflow and reduction machines). Apparently, a significant departure from the von Neumann architecture will come from the development of the idea of fifth-generation machines, in which information processing is based not on calculation but on logical inference.

2.2 Command, command formats

A command is a description of an elementary operation that a computer must perform.

Command structure.

The number of bits allocated for recording a command depends on the hardware of the particular computer model. For this reason, the structure of a command is considered here for the general case.

In general, the command contains the following information:

Ø code of the operation being performed;

Ø instructions for defining operands or their addresses;

Ø instructions on the placement of the result obtained.

For any particular machine, the number of bits allocated in the command for each of its addresses and for the opcode must be specified, as well as the actual opcodes themselves. The number of binary bits allotted in the command for each address when the machine is designed sets an upper bound on the number of separately addressable memory cells: if an address in the command is represented using n binary bits, then the fast-access memory cannot contain more than $2^n$ cells.
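The relationship between field widths and addressable memory can be sketched in a few lines of Python. The field widths used here (an 8-bit opcode and 10-bit addresses) and the function names are assumptions chosen for illustration; the text does not fix them for any particular machine.

```python
def max_cells(address_bits: int) -> int:
    """Upper bound on separately addressable cells for an n-bit address field."""
    return 2 ** address_bits


def pack_command(opcode: int, addresses: list[int],
                 opcode_bits: int, address_bits: int) -> int:
    """Pack an opcode followed by its address fields into one binary word."""
    if not 0 <= opcode < 2 ** opcode_bits:
        raise ValueError("opcode does not fit in its field")
    word = opcode
    for address in addresses:
        if not 0 <= address < 2 ** address_bits:
            raise ValueError("address does not fit in its field")
        word = (word << address_bits) | address   # append the next address field
    return word


def unpack_command(word: int, n_addresses: int,
                   opcode_bits: int, address_bits: int) -> tuple[int, list[int]]:
    """Split a packed word back into (opcode, [addresses])."""
    mask = (1 << address_bits) - 1
    addresses = []
    for _ in range(n_addresses):
        addresses.append(word & mask)             # lowest field is the last address
        word >>= address_bits
    addresses.reverse()
    return word & ((1 << opcode_bits) - 1), addresses


# Hypothetical layout: 8-bit opcode and three 10-bit addresses.
word = pack_command(53, [1, 2, 3], opcode_bits=8, address_bits=10)
decoded = unpack_command(word, 3, opcode_bits=8, address_bits=10)
```

With 10-bit address fields, `max_cells(10)` gives 1024, so a command in this layout could not refer to a cell numbered 1024 or higher.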

The commands are executed sequentially, starting from the starting address (entry point) of the executable program, the address of each next command is one more than the address of the previous command, if it was not a jump command.

In modern machines, the length of commands is variable (usually from two to four bytes), and the ways of specifying the addresses of variables are quite varied.

The address part of the command may contain, for example:

Operand;

Operand address;

The address of the operand address (byte number from which the operand address is located), etc.

Let's consider the structure of possible variants of several types of commands.

Three-address commands.

Two-address commands.

One-address commands.

Zero-address commands.

Consider a binary addition operation: c = a + b.

For each variable in memory, we define conditional addresses:

Let 53 be the code of the addition operation.

In this case, the structure of the three-address command looks like this:

· Three-address commands.

The command execution process is divided into the following stages:

The next command is fetched from the memory cell whose address is stored in the command counter; the content of the counter then changes so that it holds the address of the next command in order;

The fetched command is transferred to the command register of the control unit (CU);

The control unit decodes the address field of the command;

On signals from the CU, the values of the operands are read from memory and written to the special operand registers of the ALU;

The CU decodes the operation code and signals the ALU to perform the corresponding operation on the data;

The result of the operation is, in this case, sent to memory (in one-address and two-address computers it remains in the processor);

All the preceding actions are repeated until the STOP command is reached.
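The stages listed above can be sketched as a short interpreter for a three-address machine. This is a minimal illustration, not a model of any real computer: the operation code 53 for addition comes from the text, while the code 0 for STOP, the tuple representation of a command and the memory layout are assumptions made for the example.

```python
ADD, STOP = 53, 0   # 53 is the addition code from the text; 0 for STOP is an assumption


def run(memory: list, entry_point: int) -> list:
    """Execute three-address commands stored in memory, starting at entry_point."""
    counter = entry_point                          # command counter
    while True:
        command = memory[counter]                  # fetch the command the counter points at
        counter += 1                               # counter now holds the next command's address
        opcode, a1, a2, a3 = command               # command register: split into fields
        if opcode == STOP:
            return memory
        if opcode == ADD:
            left, right = memory[a1], memory[a2]   # operands read into the ALU registers
            memory[a3] = left + right              # result sent back to memory
        else:
            raise ValueError(f"unknown operation code {opcode}")


# Cells 0-1 hold the program, cells 4-6 hold a, b and c for c = a + b.
memory = [(ADD, 4, 5, 6), (STOP, 0, 0, 0), None, None, 2, 3, 0]
run(memory, 0)
print(memory[6])   # prints 5
```

Commands and data share one list here, which mirrors the single memory of the architecture; only the command counter decides which cells are treated as commands.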

2.3 The computer as an automaton

"Electronic digital machines with programmed control are an example of one of the most widespread types of converters of discrete information, called discrete or digital automata" (Glushkov V.M. Synthesis of digital automata)

Any computer works automatically (whether it is a large or small computer, a personal computer or a super-computer). In this sense, a computing machine as an automaton can be described by the block diagram shown in Fig. 2.1.

In the previous paragraphs, the block diagram of a computer was considered. Based on the structural diagram of the computer and the circuit of the automaton, we can compare the blocks of the circuit of the automaton and the elements of the structural diagram of the computer.

As executive elements, the machine includes:

· arithmetic logic unit;

· memory;

· information input-output devices.

The control element of the machine is a control device that actually provides an automatic mode of operation. As already noted, in modern computing devices, the main executive element is a processor or microprocessor, which contains an ALU, a memory, and a control device.

Auxiliary devices of the machine can be all kinds of additional means that improve or expand the capabilities of the machine.

In 1946, three scientists - Arthur Burks, Herman Goldstine, and John von Neumann - published the article "Preliminary Discussion of the Logical Design of an Electronic Computing Instrument." The article substantiated the use of a binary system for representing data in a computer (chiefly because of the simpler technical implementation and the ease of performing arithmetic and logical operations; before that, machines stored data in decimal form) and put forward the idea of using shared memory for programs and data. Von Neumann's name was widely known in the science of that time, which overshadowed his co-authors, and these ideas came to be called the "von Neumann principles."

Binary coding principle

According to this principle, all information entering a computer is encoded using binary signals (binary digits, bits) and divided into units called words.

The principle of memory homogeneity

Programs and data are stored in the same memory. Therefore, the computer does not distinguish between what is stored in a given memory cell - a number, a text or a command. You can perform the same actions on commands as on data.

The principle of memory addressability

Structurally, the main memory consists of numbered cells; any cell is available to the processor at any time. Hence, it is possible to give names to memory areas, so that the values stored in them could be subsequently accessed or changed during program execution using the assigned names.

The principle of sequential program control

Assumes that the program consists of a set of instructions that are executed by the processor automatically one after the other in a specific sequence.

The principle of rigidity of architecture

The topology, architecture and list of commands remain unchanged during operation.

Computers built on these principles are classified as von Neumann machines.

The computer must have:

- an arithmetic logic unit that performs arithmetic and logical operations. Nowadays this role is filled by the central processing unit. The CPU (central processing unit) is the computer's microprocessor, a microcircuit that controls all processes taking place in the computer;

- a control unit, which organizes the process of executing programs. In modern computers, the arithmetic logic unit and the control unit are combined into the central processor;

- a memory device (memory) for storing programs and data;

- external devices for input-output of information.

- With the help of an external device, a program is entered into the computer's memory.

- The control unit reads the contents of the memory cell where the first instruction (command) of the program is located and organizes its execution. The command can set:

- performing logical or arithmetic operations;

- reading data from memory to perform arithmetic or logical operations;

- recording results into memory;

- input of data from an external device to memory;

- data output from memory to an external device.

- The control unit then executes the command from the memory location immediately following the command just executed. However, this order can be changed using control transfer (jump) commands. These commands tell the control unit to continue executing the program starting from the command contained in another memory location.

- The results of the program execution are output to an external device of the computer.

- The computer enters standby mode and waits for a signal from an external device.

- the main electronic board (system board, motherboard), which contains the blocks that process information;

- circuits that control other computer devices plugged into standard connectors on the system board - slots;

- information storage disks;

- a power supply unit from which power is supplied to all electronic circuits;

- chassis (system unit), in which all the internal devices of the computer are installed on a common frame;

- keyboard;

- monitor;

- other external devices.

Computers built on von Neumann principles

In the mid-1940s, a project for a computer that stores its programs in shared memory was developed at the Moore School of Electrical Engineering at the University of Pennsylvania. The approach described in this document came to be known as the von Neumann architecture, after the only named author of the project, John von Neumann, although in fact the authorship of the project was collective. The von Neumann architecture solved the problems inherent in the ENIAC computer, which was being built at that time, by storing the computer's program in its own memory. Information about the project became available to other researchers shortly after

Computer memory represents a number of numbered cells, each of which can contain either processed data or program instructions. All memory cells should be equally easily accessible to other computer devices.

Principle of operation:

One of the principles of the "von Neumann architecture" reads: the computer does not have to change its wire connections if all instructions are stored in its memory. As soon as this idea was put into practice within the framework of the "von Neumann architecture", the modern computer was born.

Like any technology, computers have evolved towards greater functionality, practicality and beauty. There is even a statement that claims to be a law: a perfect device cannot be ugly in appearance, and conversely, beautiful technology is never bad. The computer becomes not only a useful device but also one that decorates the room. The appearance of a modern computer does, of course, correspond to the von Neumann scheme, but at the same time differs from it.

Thanks to IBM, von Neumann's ideas were realized in the form of the widespread principle of the open architecture of computer system units. According to this principle, a computer is not a single one-piece device but consists of independently manufactured parts, and the methods for connecting devices to the computer are not a manufacturer's secret but are available to everyone. Thus, system units can be assembled like a children's construction set: parts can be swapped for other, more powerful and modern ones, upgrading the computer ("upgrade" meaning "raise the level"). New parts are completely interchangeable with old ones. The system bus is also what makes personal computers "open-architecture": it is a kind of common road, vein or channel onto which the outputs of all nodes and parts of the system unit lead. It should be said that large (non-personal) computers do not have this property of openness: their parts cannot simply be replaced with something more advanced. In the most modern computers, even the connecting wires between the elements of the system, such as the mouse, the keyboard and the system unit, may be absent. These elements can communicate with each other using infrared radiation; for this purpose the system unit has a special window for receiving infrared signals (as with a TV remote control).

At present, an ordinary personal computer is a complex consisting of:

At the age of eight, John von Neumann already mastered the basics of higher mathematics and several foreign and classical languages. After graduating from the University of Budapest in 1926, von Neumann taught in Germany, and in 1930 he emigrated to the United States and became a fellow at the Princeton Institute for Advanced Study.

In 1944, von Neumann and the economist O. Morgenstern wrote the book "Theory of Games and Economic Behavior." This book contains not only mathematical game theory but also its applications to economics, military science and other fields. John von Neumann was assigned to the ENIAC development team as a consultant on the mathematical questions the team had encountered.

In 1946, together with H. Goldstine and A. Burks, he wrote and released the report "Preliminary Discussion of the Logical Design of an Electronic Computing Instrument." Since von Neumann's name as an outstanding physicist and mathematician was already well known in wide scientific circles, all the statements made in the report were attributed to him. Moreover, the architecture of the first two generations of computers, with sequential execution of the instructions in a program, came to be called the "von Neumann architecture."

1. The principle of programmed control

This principle ensures the automation of computing processes on a computer.

The program consists of a set of instructions that are executed by the processor automatically one after the other in a specific sequence. Fetching a program from memory is carried out using an instruction counter. This processor register sequentially increases the address of the next instruction stored in it by the instruction length. Since the instructions of the program are located in memory one after another, thereby the selection of a chain of instructions from sequentially located memory cells is organized. If, after the execution of the command, it is necessary to go not to the next, but to some other, the commands of conditional or unconditional jumps are used, which enter the number of the memory cell containing the next command into the command counter. Fetching commands from memory stops after reaching and executing the command “stop”. Thus, the processor executes the program automatically, without human intervention.

2. The principle of homogeneity of memory

The absence of a fundamental difference between the program and the data made it possible for the computer to form a program for itself in accordance with the result of calculations.

Programs and data are stored in the same memory. Therefore, the computer does not distinguish between what is stored in a given memory location - a number, a text, or a command. You can perform the same actions on commands as on data. This opens up a whole range of possibilities. For example, a program in the course of its execution can also undergo processing, which makes it possible to set rules in the program itself for obtaining some of its parts (this is how the execution of loops and subroutines is organized in the program). Moreover, the instructions of one program can be received as the results of the execution of another program. The translation methods are based on this principle - translation of the program text from a high-level programming language into the language of a specific machine.
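The idea that a command can be processed like any other number can be shown with a toy accumulator machine. Everything about the machine is an assumption made for this sketch: each cell holds an integer, a command is encoded as opcode * 100 + address, and the four operation codes are invented for the example.

```python
HALT, LOAD, ADD, STORE = 0, 1, 2, 3   # hypothetical operation codes


def run(memory: list[int]) -> int:
    """Run a one-address program from cell 0 and return the accumulator."""
    accumulator, counter = 0, 0
    while True:
        word = memory[counter]
        counter += 1
        opcode, address = divmod(word, 100)   # decode: opcode * 100 + address
        if opcode == HALT:
            return accumulator
        elif opcode == LOAD:
            accumulator = memory[address]
        elif opcode == ADD:
            accumulator += memory[address]
        elif opcode == STORE:
            memory[address] = accumulator
        else:
            raise ValueError(f"unknown operation code {opcode}")


program = [
    104,  # 0: LOAD 4   read the command in cell 4 as if it were a number
    208,  # 1: ADD 8    add the constant 1, which bumps its address field
    304,  # 2: STORE 4  write the modified command back
    109,  # 3: LOAD 9   accumulator = 10
    206,  # 4: ADD 6    by the time it runs, this cell holds 207, i.e. ADD 7
    0,    # 5: HALT
    100,  # 6: data
    200,  # 7: data
    1,    # 8: constant used to modify the command
    10,   # 9: data
]
result = run(program)
print(result)   # prints 210
```

The program changes the address field of its own ADD command before executing it, so the sum uses cell 7 rather than cell 6. Early machines used this kind of address modification to step through arrays in loops.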

3. The principle of addressing

Structurally, the main memory consists of numbered cells. Any cell is available to the processor at any time. Hence, it is possible to give names to memory areas, so that the values stored in them can be subsequently accessed or changed during the execution of programs using the assigned names.

Von Neumann described what a computer should be in order to be a versatile and convenient tool for processing information. First of all, it must have the following devices:

- an arithmetic logic unit that performs arithmetic and logical operations;

- a control unit that organizes the process of executing programs;

- a memory device for storing programs and data;

- external devices for the input and output of information.

Computers built on these principles are classified as von Neumann machines.

Today these are the overwhelming majority of computers, including IBM PC-compatible machines. But there are also computer systems with a different architecture, for example, systems for parallel computing.

Backbone-modular principle of computer construction

The architecture of a computer is understood as its logical organization, structure and resources, that is, the means of a computing system. The architecture of modern PCs is based on the backbone-modular principle.

The modular principle allows the consumer to choose the desired computer configuration and, if necessary, upgrade it. The modular organization of the system is based on the backbone (bus) principle of information exchange. The backbone, or system bus, is a set of electronic lines that link together the processor, memory and peripherals for memory addressing, data transfer and service signals.

The exchange of information between individual computer devices is carried out over three multi-bit buses connecting all modules - the data bus, the address bus and the control bus.

The connection of individual computer modules to the backbone at the physical level is carried out using controllers, and at the software level it is provided by drivers. The controller receives the signal from the processor and decodes it so that the corresponding device can receive this signal and respond to it. The processor is not responsible for the reaction of the device - this is a function of the controller. Therefore, external computer devices are replaceable, and the set of such modules is arbitrary.

The bit width of the data bus is set by the bit width of the processor, that is, the number of binary bits that the processor processes in one clock cycle.

Data on the data bus can be transferred both from the processor to any device, and in the opposite direction, that is, the data bus is bi-directional. The main operating modes of the processor using the data transfer bus include the following: writing / reading data from RAM and from external storage devices, reading data from input devices, sending data to output devices.

The device taking part in a data exchange is chosen by the processor, which generates the address code of that device or, for RAM, the address code of the memory cell. The address code is transmitted over the address bus, and signals travel in one direction only, from the processor to the devices; that is, this bus is unidirectional.

The control bus transmits signals that determine the nature of the exchange of information, and signals that synchronize the interaction of devices participating in the exchange of information.
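The roles of the three buses can be sketched with a small model in which every transfer carries a control signal, an address and, for writes, a data value. The class names, the "read"/"write" control values and the address ranges are assumptions of this example and do not describe any specific hardware.

```python
class Ram:
    """A block of numbered cells attached to the bus."""

    def __init__(self, size: int) -> None:
        self.cells = [0] * size

    def read(self, offset: int) -> int:
        return self.cells[offset]

    def write(self, offset: int, value: int) -> None:
        self.cells[offset] = value


class SystemBus:
    """Routes each transfer to the device that owns the address."""

    def __init__(self) -> None:
        self.devices = []   # list of (base address, size, device)

    def attach(self, base: int, size: int, device) -> None:
        self.devices.append((base, size, device))

    def transfer(self, control: str, address: int, data=None):
        # Address bus: the address only ever travels from processor to devices.
        for base, size, device in self.devices:
            if base <= address < base + size:
                # Control bus: the signal says which way the data will flow.
                if control == "write":
                    device.write(address - base, data)   # data bus: processor -> device
                    return None
                if control == "read":
                    return device.read(address - base)   # data bus: device -> processor
                raise ValueError(f"unknown control signal {control!r}")
        raise LookupError(f"no device responds to address {address}")


bus = SystemBus()
bus.attach(0, 16, Ram(16))       # cells 0..15
bus.attach(16, 4, Ram(4))        # a second module, standing in for another device
bus.transfer("write", 3, 42)
bus.transfer("write", 17, 7)
value = bus.transfer("read", 3)
```

Because the processor only ever sees addresses, a module can be replaced by any other object with the same `read` and `write` behaviour, which is the point of the modular principle.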

External devices are connected to the buses via an interface. An interface is understood as a set of various characteristics of a PC peripheral device, which determine the organization of information exchange between it and the central processor. In case of incompatibility of interfaces (for example, system bus interface and hard drive interface), controllers are used.

To enable the devices that make up the computer to communicate with the central processor, IBM-compatible computers are provided with an interrupt system. The interrupt system allows the computer to pause its current activity and switch to another in response to an incoming request, for example, a key press on the keyboard. On the one hand, it is desirable for the computer to stay busy with the work assigned to it; on the other, it must respond immediately to any request requiring attention. Interrupts provide this immediate response from the system.
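A toy model can show the basic pattern: the processor works through its task, and before each step it services any requests that have arrived. This is only a sketch under simplifying assumptions; the function name, the step-indexed arrival table and the handler table are invented for illustration, and real interrupt controllers involve priorities, masking and saved processor state that are omitted here.

```python
from collections import deque


def run_with_interrupts(work_steps: int, arrivals: dict, handlers: dict) -> list[str]:
    """Run work_steps units of work, servicing requests that arrive before a step.

    arrivals maps a step index to the name of the request arriving before it;
    handlers maps a request name to a function that services it.
    """
    pending = deque()
    log = []
    for step in range(work_steps):
        if step in arrivals:
            pending.append(arrivals[step])    # a device raises a request
        while pending:                        # current work is paused
            request = pending.popleft()
            log.append(handlers[request]())   # the handler runs first
        log.append(f"work {step}")            # then the interrupted work resumes
    return log


log = run_with_interrupts(
    work_steps=3,
    arrivals={1: "keyboard"},
    handlers={"keyboard": lambda: "key handled"},
)
print(log)   # ['work 0', 'key handled', 'work 1', 'work 2']
```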

The progress of computer technology is proceeding by leaps and bounds. New processors, boards, drives and other peripherals appear every year. The growth of the potential of the PC and the emergence of new, more productive components inevitably makes you want to upgrade your computer. However, it is impossible to fully appreciate the new achievements of computer technology without comparing them with existing standards.

PC development is always based on old standards and principles. Knowing them is therefore a fundamental factor for (or against) the choice of a new system.

The computer includes the following components:

- central processing unit (CPU);

- RAM (memory);

- storage devices (storage devices);

- input devices;

- output devices;

- communication devices.

State Educational Institution of Higher Professional Education of the Tyumen Region

TYUMEN STATE ACADEMY OF WORLD ECONOMY, GOVERNANCE AND LAW

Department of Mathematics and Informatics

Discipline: "COMPUTER SYSTEMS, NETWORKS AND TELECOMMUNICATIONS"

"VON NEUMANN'S PRINCIPLES"

1. Introduction

2. The basic principles of architecture by John von Neumann

3. Computer structure

4. How John von Neumann's machine works

5. Conclusion

References

Introduction

Since the mid-1960s, the approach to the creation of computers has changed dramatically. Instead of developing hardware and mathematical support (software) separately, designers began to design a system as a synthesis of hardware and software. At the same time, the concept of their interaction came to the fore. This is how a new concept arose: computer architecture.

Under the architecture of a computer, it is customary to understand a set of general principles of organizing hardware and software tools and their main characteristics, which determines the functionality of the computer when solving the corresponding types of problems.

Computer architecture covers a significant range of problems associated with the creation of a complex of hardware and software and taking into account a large number of determining factors. Among these factors, the main ones are: cost, scope, functionality, ease of use, and hardware is considered one of the main components of the architecture.

The architecture of the computing facility must be distinguished from the structure, since the structure of the computing facility determines its current composition at a certain level of detail and describes the connections within the facility. The architecture, on the other hand, defines the basic rules for the interaction of the constituent elements of a computing facility, the description of which is carried out to the extent necessary to form the rules of interaction. It does not establish all the connections, but only the most necessary ones, which must be known for a more competent use of the tool used.

Thus, a computer user does not care what components the electronic circuits are built from, whether commands are executed in hardware or in software, and the like. Computer architecture reflects the range of problems that relate to the general design and construction of computers and their software.

The architecture of a computer includes both a structure that reflects the composition of a PC, and software and mathematical support. The structure of a computer is a set of elements and the connections between them. The basic principle of construction of all modern computers is program control.

The foundations of the theory of computer architecture were laid by John von Neumann. The combination of these principles gave rise to the classical (von Neumann) computer architecture.

Basic principles of architecture by John von Neumann

John von Neumann (1903 - 1957) was an American mathematician who made a great contribution to the creation of the first computers and the development of methods for their application. It was he who laid the foundations for the doctrine of computer architecture, having joined the creation of the world's first vacuum-tube computer, ENIAC, in 1944, when its design had already been selected. In the course of the work, during numerous discussions with his colleagues H. Goldstine and A. Burks, John von Neumann expressed the idea of a fundamentally new computer. In 1946, the scientists outlined their principles of building computers in the now classic article "Preliminary Discussion of the Logical Design of an Electronic Computing Instrument." More than half a century has passed since then, but the provisions put forward in it remain relevant today.

The article convincingly substantiates the use of a binary system for representing numbers, because earlier all computers stored processed numbers in decimal form. The authors demonstrated the advantages of a binary system for technical implementation, convenience and simplicity of performing arithmetic and logical operations in it. In the future, computers began to process non-numerical types of information - text, graphic, sound and others, but the binary coding of data still forms the information basis of any modern computer.

Another revolutionary idea, the importance of which can hardly be overestimated, is the "stored program" principle proposed by von Neumann. Initially, a program was set by placing jumpers on a special patch panel. This was a very time-consuming task: for example, it took several days to change the program of the ENIAC machine, while the actual calculation could not last more than a few minutes, because so many vacuum tubes failed. Von Neumann was the first to realize that a program can also be stored as a set of zeros and ones, and in the same memory as the numbers it processes. The absence of a fundamental difference between the program and the data made it possible for the computer to form a program for itself in accordance with the results of calculations.

Computer structure

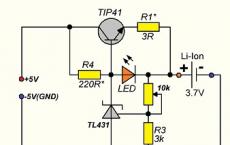

John von Neumann not only put forward the fundamental principles of the logical organization of a computer, but also proposed its structure, which was reproduced throughout the first two generations of computers. According to von Neumann, the main blocks are a control unit (CU) and an arithmetic logic unit (ALU), usually combined into a central processor, which also includes a set of general-purpose registers for intermediate storage of information during its processing; memory; external memory; and input and output devices. It should be noted that external memory differs from input and output devices in that data is entered into it in a form convenient for a computer but inaccessible to direct human perception.

Computer architecture based on the principles of John von Neumann.

Solid lines with arrows indicate the direction of information flows, dashed lines - control signals.

How John von Neumann's machine works

Now let's talk in more detail about how a machine built on this architecture works. A von Neumann machine consists of a storage device (memory), an arithmetic logic unit (ALU), a control unit (CU), and input and output devices, as can be seen in the diagram and as discussed earlier.

Programs and data are entered into memory from an input device through the arithmetic logic unit. All program commands are written into adjacent memory cells, while data for processing can be contained in arbitrary cells. For any program, the last command must be a halt command.

The command consists of specifying what operation should be performed and the addresses of the memory cells where the data is stored on which the specified operation should be performed, as well as the address of the cell where the result should be written if it is required to be stored in the memory.

The arithmetic logic unit performs the operations indicated by the commands on the indicated data. From it, the results are output to memory or an output device.

The control unit (CU) controls all parts of the computer. It sends other devices signals telling them what to do, and receives from them information about their status. It contains a special register (cell) called the "command counter". After the program and data are loaded into memory, the address of the first command of the program is written into the command counter. The CU then reads from memory the contents of the cell whose address is in the command counter and places it in a special device, the "command register". The CU determines the operation of the command, "marks" in memory the data whose addresses are indicated in the command, and controls the execution of the command.

The ALU provides arithmetic and logical processing of two variables, as a result of which an output variable is formed. ALU functions are usually reduced to simple arithmetic and logical operations and shift operations. It also generates a number of result attributes (flags) that characterize the result obtained and the events that occurred in producing it (equality to zero, sign, parity, overflow). The flags can be analyzed by the CU in order to decide on the further sequence of command execution.
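The four flags named above can be computed for an addition as in the following sketch. The 8-bit default width, the function name and the convention that the parity flag is set for an even number of one bits are assumptions of this example; real processors differ in the details.

```python
def alu_add(a: int, b: int, width: int = 8) -> tuple[int, dict[str, bool]]:
    """Add two width-bit values and report the flags for the result."""
    mask = (1 << width) - 1
    sign_bit = 1 << (width - 1)
    a &= mask
    b &= mask
    result = (a + b) & mask                   # keep only the low `width` bits
    flags = {
        "zero": result == 0,
        "sign": bool(result & sign_bit),      # top bit set: negative in two's complement
        # Assumed convention: flag set when the number of 1 bits is even.
        "parity": bin(result).count("1") % 2 == 0,
        # Signed overflow: both operands differ in sign from the result.
        "overflow": bool((a ^ result) & (b ^ result) & sign_bit),
    }
    return result, flags


result, flags = alu_add(127, 1)   # largest positive 8-bit value plus one
```

For `alu_add(127, 1)` the result is 128 with the sign and overflow flags set, which is the kind of condition a control unit could test before choosing the next command.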

As a result of the execution of any command, the command counter increases by one and therefore points to the next command in the program. When it is necessary to execute a command that is not the next in order but lies some number of addresses away from the current one, a special jump command is used, which contains the address of the cell to which control must be transferred.

Conclusion

So, let us highlight once again the basic principles proposed by von Neumann:

· The principle of binary coding. A binary number system is used to represent data and commands.

· The principle of memory homogeneity. Both programs (instructions) and data are stored in the same memory (and encoded in the same number system - most often binary). You can perform the same actions on commands as on data.

· The principle of memory addressability. Structurally, the main memory consists of numbered cells; any cell is available to the processor at any time.